The RED machine: Cryptanalysis by SIS

The RED Home Page

- Introduction

- Working

- History

- Genuine messages

- Procedures

- My Simulator

- Cryptanalysis

- SIS's Cryptanalysis (This page).

- Challenges

Introduction

In 1936, Frank B. Rowlett and Solomon Kullback (R & K), under the direction of William F. Friedman, succeeded in cracking the RED machine. Using only cryptograms collected by radio or telegraph, they not only succeeded in reconstructing the machine's operation but also in developing procedures to crack its current traffic.

This page describes the work of this SIS team (SIS: US Army Signal Intelligence Service), based primarily on Rowlett's memoirs (see References).

Note: Rowlett's verbatim text is shown in italics.

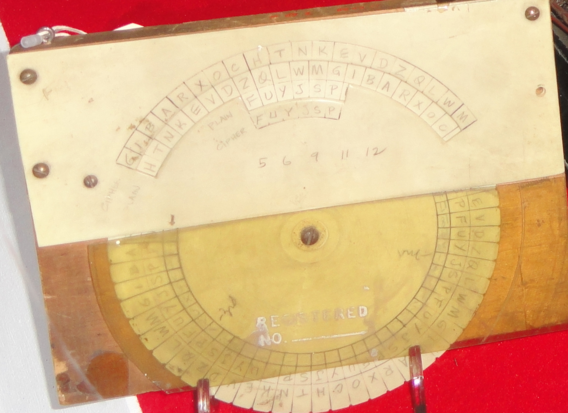

| Fig 1: The RED machine analog in paper |

Investigations begin

Original text

[p 109] Working under Friedman’s general direction, Kully and I started our study of the five-digit traffic by applying the cryptanalytic tests we had successfully employed in our work on the cipher machine systems which we had solved in out training course. Our application of these tests were greatly facilitated because we were able to use IBM accounting equipment. ...

Five-digit traffic

In 1930, Friedman formed a team of cryptanalysts who would become famous: Rowlett, Kullback, and Sinkov. After theoretical training on various cryptographic systems, including cipher machines, they began working on genuine encrypted messages, with priority given to Japanese messages.

These messages were divided into several groups. What distinguished these groups was their origin and the message headers. Friedman's team assumed (correctly) that each group of messages was encrypted by the same system.

In 1936, R & K began studying a batch of Japanese messages from Japanese embassies, the first group of which consisted of five digits. Here is an example of the beginning of a message (see the genuine messages page):

02143 JKTRY VLBZW DVJDO LVQDZ XXCNZ FKUQY BRRIX …

Statistical Analysis Methods

K & R, during their training in cryptanalysis under Friedman's direction, learned several statistical methods. A priori, I think these are, first of all, frequency analyses for one letter, two letters (bigrams), three letters (trigrams); the calculation of the coincidence index (IC) for each letter or group of letters (2, 3, 4, etc.); the calculation of IC in relation to the superposition of messages; the study of repetitions (from groups of four to several letters); and finally, Friedman's interval method (see below).

From these preliminary analyses, it is clear that the RED system is a substitution cipher. If we had had a code system, we would have had repetitions (even if it were overciphered); if we had a transposition, we would have had the preservation of the frequencies of Japanese letters.

Using IMB machines

In 1931, the SIS consisted of only six people: Friedman, his secretary, and four young cryptanalysts (Rowlett, Kullback, Sinkov, Hurt). To help them renew the War Department's ciphers, the SIS used IBM punchcard machines (see References).

Although initially, IBM punchcard machines were used for code books making, they quickly began to be used in cryptanalysis.

These machines were used for the following operations:

- Statistics on a text (plaintext or ciphertext): calculating the frequency of letters, bigrams, trigrams, and words in a text.

- Creating indexes of groups of letters appearing in a ciphertext.

- Searching for repetitions appearing in different texts.

- Placing two texts in depth.

The breaking of Japanese codes was made possible largely thanks to the intensive use of these machines, both by the SIS and the Navy.

WARNING! Although the capabilities of IBM machines seem extraordinary, the operations required extensive manipulation of the punched cards. Sorting, for example, required the punched cards to be placed several times in the sorting machine to complete the sorting.

Over the years, IBM machines evolved. From simple machines performing simple operations (sorting, selection, etc.), they were able to become, through programming, the ancestors of modern computers and increasingly adapted to the demands of cryptanalysts. From my perspective, when I read Rowlett's memoirs, I feel as if I'm reading the memoirs of the first computer scientist.

First breach in the system: the Sixes and the Twenties

Original Text

[p 109] As our first cryptanalytic test, we decides to make an extensive search for ciphertexts repetitions (five or more letters), for if we could establish that portions of the ciphertexts were repeated either within a message or between two different messages...

[p 110] In the set of long messages we first processed, we found no repetitions of cipher letters which seemed to have any significance. ...

But we did discover an important phenomenon from the monoliteral frequency counts of certain of the messages : six of the cipher letters stood out clearly, because they were either significantly higher or lower in frequency than the remaining twenty letters of the alphabet.

This discovery was so interesting to us that we stopped our search for repetitions and began to make monoliteral frequency counts of all the long messages we had received during the past few months. We soon established that the frequency characteristics remained constant for a period of ten days, and that all messages originating within that period showed the same high- or low-frequency letters. ...

[p 109] From the results of these studies we were able to confirm that messages of each key period which carried identical five-digit groups were encipehred in the same key. ...

[p 111] In the cryptographic texts of the earliest intercepts the six vowels accounted for almost exactly half of the ciphertext letters ; in the later intercepts this striking parity between the frequency of vowels and consonants was lost ...

Reconstructed Examples of the Sixes/Twenties

To illustrate Rowlett's point, using Google Translate, I translated an English text of approximately 2,000 characters into Roma-ji. Then I ciphered it using two versions of the Plugboard, one in which the Sixes are a minority, and the other in which the Sixes are a majority. Obviously, this difference stems from the presence of frequent or infrequent letters in the Sixes.

The 10 most frequent letters in Japanese (roma-ji): A: 13%, O: 12%, I: 11%, N: 9%, S: 7%, T: 7%, U: 7%, E: 7%, K: 6%, R: 5%.

An example in which the Sixes (QKDLTJ) are infrequent:

$ python3 red_tui.py -G QKDLTJPIXRONYVEHZUMSBWCFGA < wiki_en_romanji.txt > wiki_OZAJNB $ python3 histogram.py wiki_OZAJNB A 98 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * B 93 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * C 97 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * D 62 0.03: * * * * * * * * * * * * * * * * * * * * * * E 114 0.05: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * F 97 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * G 93 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * H 106 0.05: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * I 96 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * J 76 0.03: * * * * * * * * * * * * * * * * * * * * * * * * * * * K 55 0.02: * * * * * * * * * * * * * * * * * * * L 57 0.02: * * * * * * * * * * * * * * * * * * * * M 106 0.05: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * N 95 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * O 93 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * P 94 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * Q 56 0.02: * * * * * * * * * * * * * * * * * * * * R 96 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * S 109 0.05: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * T 54 0.02: * * * * * * * * * * * * * * * * * * * U 100 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * V 100 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * W 91 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * X 91 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * Y 93 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * Z 95 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * *An example in which the Sixes (OZAJNB) are very frequent:

$ python3 red_tui.py -G OZAJNBMGHLPIDKSCQTWRYXEFUV < wiki_en_romanji.txt > wiki_OZAJNB $ python3 histogram.py wiki_OZAJNB A 142 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * B 135 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * C 66 0.03: * * * * * * * * * * * * * * * * * * D 74 0.03: * * * * * * * * * * * * * * * * * * * * * E 74 0.03: * * * * * * * * * * * * * * * * * * * * * F 59 0.03: * * * * * * * * * * * * * * * * G 71 0.03: * * * * * * * * * * * * * * * * * * * * H 89 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * I 72 0.03: * * * * * * * * * * * * * * * * * * * * J 144 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * K 91 0.04: * * * * * * * * * * * * * * * * * * * * * * * * * L 75 0.03: * * * * * * * * * * * * * * * * * * * * * M 71 0.03: * * * * * * * * * * * * * * * * * * * * N 144 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * O 135 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * P 72 0.03: * * * * * * * * * * * * * * * * * * * * Q 64 0.03: * * * * * * * * * * * * * * * * * * R 84 0.04: * * * * * * * * * * * * * * * * * * * * * * * S 71 0.03: * * * * * * * * * * * * * * * * * * * * T 74 0.03: * * * * * * * * * * * * * * * * * * * * * U 74 0.03: * * * * * * * * * * * * * * * * * * * * * V 62 0.03: * * * * * * * * * * * * * * * * * W 83 0.04: * * * * * * * * * * * * * * * * * * * * * * * X 80 0.03: * * * * * * * * * * * * * * * * * * * * * * Y 68 0.03: * * * * * * * * * * * * * * * * * * * Z 143 0.06: * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * *Note: Based on the examples I've given, you might think I've cheated to make it look easy to discern the distribution of letters between Sixes/Twenties. In fact, this distribution was easy to discover because the plugboard was valid for a period of 10 days! Thus, statistics could be accumulated on several thousand or even tens of thousands of characters. Even if Sixes were slightly more or less frequent than other letters, this difference appeared.

The 5-digits group

R & K claim that messages with the same indicator were encrypted with the same key. How do they make this deduction? It's simple: they superimpose the messages (in-depth) and calculate the number of coincidences. This value divides by the length of the smallest message, is equal to IC (Index of Coincidence invented by Friedman): a high value indicates that the messages were indeed encrypted with the same key.

In the following example, we have two messages (msg_1.txt and msg_2.txt). We encrypt the first message (msg_1.cry). We also encrypt the second message, but the first time with the same key used to encrypt the first message (msg_2a.cry) and a second time with a different key (msg_2b.cry). If we calculate the IC for the pair using the same key, we obtain a large IC of 0.062. On the other hand, if the keys are different (for msg_1.cry and msg_2b.cry), we obtain a low IC (0.027).

$ fold -w50 msg_1.txt KONOANGOOWATTSUNODOKURITSUSHITASAGYOOGURUUPUNIYOTT EKAIDOKUNISEIKOOSHIMASHITAIGIRISUGASAISHONIKAIDOKU NISEIKOOSHITANOWAHYUUFOSUTOORIBAASUTOREICHIIGANENN IKAIDOKUSHIHARORUDOKENWAASHIINOKOOBOOGANENGONISONO REPURIKAJMASHINOSEISAKUSHITAIGIRISUDESHITAAMERIKAN IYORUKAIDOKUNOKOKOROMIWANENMADEMATAREMASHITARIKUGU NNOSHISUGURUUPUDEWAFURANKUROURETTOTOSOROMONKARUBAK KUGAKAIDOKUSHIMASHITAKAIGUNDEWAAGUNESUDORISUKORUGA KAIDOKUNISEIKOOSHITATOIPPANTEKINIKANGAERARETEIMASU KANOJOWAJISSAINIWAKAIGUNBUKANGASHIYOOSHITEITAORENJ IANGOOMATAWAMANGOOOKAIDOKUSHIMASHITAGATSUNOSHISUTE MUWAHONSHITSUTEKINIONAJIDEARUKOTOGAHANMEISHIMASHIT A $ fold -w50 msg_2.txt AMERIKAHITOWAKAIDOKUOJINSOKUKASURUTAMENIREPURIKAMA SHINMOKOOCHIKUSHIMASHITAKONOMASHINNIWABOINTOSHIINO BETSUBETSUNIHODOKUTAMENOTSUNOHAAFUROOTAAGAARIMASHI TASHISUGURUUPUWATOOSHOKOREOTANNINIHONNOANGOOMASHIN TOYONDEIMASHITAGASONOYOONASETSUMEITEKINAYOOGOWASEK YURITIJOONORISUKUGAARUTOHANDANSHIMASHITAKOREWAKAID OKUSARETASAISHONONIHONNOKIKAIANGOODEATTATAMESUPEKU TORUNOSAISHOKARAHAJIMERUKOTONISHIredDOTONADZUKEMAS HITA $ python3 red_tui.py -G PZIFXNUAERSHBGVDTYMKCOWQLJ \ > -W 1,1,1 -P 01100111101 < msg_1.txt > msg_1.cry $ python3 red_tui.py -G PZIFXNUAERSHBGVDTYMKCOWQLJ \ > -W 1,1,1 -P 01100111101 < msg_2.txt > msg_2a.cry $ python3 red_tui.py -G PZIFXNUAERSHBGVDTYMKCOWQLJ \ > -W 10,3,11 -P 01100111101 < msg_2.txt > msg_2b.cry $ python3 IC_2txt.py msg_1.cry msg_2a.cry IC: 0.062 $ python3 IC_2txt.py msg_1.cry msg_2b.cry IC: 0.027

Conclusion

From the very beginning of their study, R & K, under Friedman's guidance, made a first crack in the RED cryptographic system (they did not yet know that it was produced by a cipher machine):

- The system appears to be a system of substituting letters in Japanese messages expressed in roma-ji (the usual system used in telegraphy) with two subsets: Sixes and Twenties (6/20 letters).

- This Sixes/Twenties separation corresponded to the Vowel/Consonant separation in the first messages.

- Part of the key is used for a period of 10 days.

- The first cipher group specifies not only the use of the RED system, but also the remainder of the key used.

Analysis from partial plain texts

Choice of study material

[p 119] In searching through the intercepts from the Far Eastern Diplomatic Net, I had come across an exceptionaly long message … There were also two other very long messages which had been transmitted over the same net and which bore the same date as the unusually long message. If our theories about the system were correct, all three of the messages would have to be enciphered in the same key period. Another favorable consideration was that they had been intercepted early in the use of the system when the six vowels comprised roughly fifty percent of the ciphertext, and we had theorized that if each vowel in the ciphertext represented a vowel in the plaintext.

Analysis

From the study of cipher machines he conducted during his apprenticeship under Friedman, Rowlett learned one thing: if you have both the plaintext and the ciphertext, you can deduce the algorithm that allows you to move from one to the other.

roma-ji has many statistical biases that make it almost easy to find several words or expressions based solely on knowledge of vowels. This is what R & K did.

Then, thanks to the statistical interval test, R & K were able to formulate hypotheses that corresponded to the test results and the reconstructed pieces of plaintext.

Note: The statistical interval test was created by Friedman when he analyzed the Hebern machine. This test remained classified for over 70 years. Its principle is simple: the frequency of letters is measured at a particular distance (interval): a distance of 1, 2, 3, etc. For example, we take the letter A and calculate the frequency of the pair A.O (O 3 apart from A), the letter separating A and O being any letter. NSA document A67330 (see Reference) explains the use of this test to verify the supposed operation of the RED machine.

[p 117] I repeated (to Kully) my conviction that if we could accurately develop a few phrases of plaintext for some of the ciphertext passages we certainly would be in a better position to evaluate the statistical findings. ...

In this form (telegraphic) of Japanese certain limitations applied to the letter Y ; it was always followed by one of the other vowels, most frequently by O or U, very infrequently by A, almost never by E or I. Pairs of vowels frequently occurred, and the most common were the combinations OO, UU, AI and EI in about that order of frequency. YUU and YOO often occured, preceded by a consonant, in combinations such as RYOO, TYUU, KYOO and KYUU. ...

[p 118] We found that we could also use the vowel arrangement to make tentative decipherments of the pairs and triplets of vowel in the ciphertext for which we had made no assumptions, and we were delighted to find that in almost all of these cases our decipherment produced acceptable plaintext.

As we continued to apply this process to the ciphertext of the message we were studying, we came across a combination of cipher letters which when deciphered produced OYOBI, which is the Japanese word for English AND. ...

It did not take us much longer to establish the pattern followed in the displacement of the vowel arrangement. Once this had been determined, we were able to decipher accurately all the vowels of the message.

Discovery of the Reverse mode

[p120] Our next task was to decipher the third message. We applied the techniques we had used for the second message, but without success. When we recalled that one of the vowel arrangements I had recovered form my earlier work on the three messages was the exact reverse of the arrangements developed for the other two messages, we checked to determine which of the three messages produced the reversed arrangement. When we found that the reversed sequence applied to the message we were studying, we promptly reversed the vowel and consonant sequences and applied them to the next of the third message. When we did this, it required only a little manipulation of the starting points of the sequence to produce valid plaintext Japanese. After deciphering a fairly long passage of ciphertext, we found that a third stepping pattern of forty-three elements ad been employed. It was also within the range suggested by Wenger.

Conclusion

Ultimately, R & K understand the basic operation of the RED system: there are two sets of letters (the Sixes and the Twenties) which are each mixed and which shift, in one direction or the other (but in a constant manner for the same message), with each encryption or decryption operation.

[p 119] While we ate lunch, we reviewed what we had learned about the system. There seemed to be two subsequences, one for the vowels and one for the consonants. Apparently these subsequences provides for independent encipherment of the vowels and consonants; a vowel would always be replaced by a vowel and a consonant by a consonant. Evidently the two subsequences operated in synchronism, both advancing with the encipherment of each letter of the message whether it was a vowel or a consonant. There also appeared to be a motion pattern which controlled the advancement of the two subsequences, introducing additional steps at certain points. We had recovered the vowel arrangement and the stepping pattern used for it. If our assumptions regarding the synchronous operation of the two subsequences were correct and if the same stepping pattern was used also for the consonants subsequence, all that was left for us to recover was the arrangement of the consonants. We felt this could be easily accomplished, ...

"More than forty ad less than fifty"

Introduction

Shortly after R & K began their investigations, Wenger (Cryptanalyst of the Navy) informed Friedman that the RED machine might have a cycle length of between 40 and 50. This information was of little use to K & R except when they noticed anomalies in the decipherment of the Sixes.

[p 112] When Friedman stated that he believed the system was based on a cipher machine, Wenger told him that some months earlier the Navy had recovered a Japanese naval cipher system which used an electromechanical cipher machine employing Japanese kana instead of the Roman alphabet (roma-ji). Friedman naturally pressed Wenger for more information about the system, but Wenger declined to provide it. ...

[p 113] [During a new meeting between Friedman and Wenger] ... Wenger had reluctantly confirmed that the kana sequence employed in the Japanese navy device had likewise been separated into two subsets [what R&K already knew]. ... When Friedman pressed for further details, Wenger finally suggested that a search for a basic cycle of "more than forty and less than fifty". ... After spending about three weeks analysed a number of key periods without finding anything which supported Wenger’s suggestion, we came to the conclusion that we had not developed the proper techniques for detecting it.

R & K rediscovers 40/50 cycles

After understanding how vowel encryption works, R & K rediscover the 40/50 cycles

[p 118] ... After several letters had been deciphered, the resulting plaintext would lose all its resemblance to proper Japanese, and additional displacements had to be introduced before the deciphered letters again assumed the appearance of good Japanese. When we recognized the effect of the additional displacement and took it into account, we were able to convert the vowels of long passages of ciphertext into acceptable plain language by a judicious insertion of an additional shift of one or two positions at the point where the decipherments lost its plaintext qualities.

[p 119] The stepping pattern which we had recovered for deciphereing the vowels repeated itself at cycles of exactly forty-two positions. When we noted this, Kully and I agreed that possibly Wenger was referring to the stepping pattern in the Japanese naval machine when he used the expression "greater that forty and less than fifty" in his conversation with Friedman.

Deciphering the Sixes

[p 120-121] We now felt that we understood the cryptography of the early use of the system well enough to solve any message enciphered by it. ... We decided to attack the current intercepts, ...

[p 122] Ou first aim was to recover the six-element subsequence by a statistical analysis of the relationships of the six letters we had identified as belonging to it. ...

Fortunately, before giving into this suspicion we decided to use a modification of the procedure by which we had recovered the order of the six vowels in the first message we had solved. The results were most gratifying, and form this point on it was all downhill sledding. Almost every test we applied worked beautifully, and by the middle of the afternoon we had reconstructed both subsequences and had successfully deciphered long passages of several of the intercepts.

Decryption of current traffic with paper analog

[p 123] We had been so preoccupied with the solution of the system that we had given no thought as to how we would decipher the intercepts after we had recovered the keys used for them. The first pratical scheme that occurred to use was to produce a "pencil and paper" analog of the device with which we could somewhat laboriously determine the plaintext equivalents of the cipher letters, ... Friedman expressed his opinion that the Japanese were using an electromechanical device, which automatically performed the enciphering and deciphering operations. He noted that he had been giving some thought to having a special apparatus constructed that would duplicate the operations of the Japanese device. He added that since there were no funds available to the Signal Corps for such purposes, it might be impossible to produce a satisfactory device in less than two or three years and that for the time being we would have to use the "pencil and paper" approach or whatever other makeshift arrangement we could devise.

Everyday Key Breaking

We have just described how R & K understood how the RED system worked and how they were able to reconstruct an emulation of this machine. Now we can ask ourselves: how did they break keys on a daily basis?

Rowlett's book tells us nothing on this subject. However, document VF-131-002 by Francis A. Raven, a Navy cryptanalyst, (see the References section of the home page) tells us that message keys were found using numerous stereotyped text sections.

We can therefore reconstruct the method used by R & K and their successors:

- Find the distribution between SIXES and TWENTIES (see above).

- Find the location of the Cribs deduced from stereotyped texts (see Cryptanalysis page).

- Deduce the plugboard configuration from the plain/crypto pairs deduced from the previous step.

- Find the rest of the key (the removed pins, the position of the half-rotors and the breakwheel, etc.)

Predicting Future Keys

Rowlett, from the beginning of his investigations into the RED machine, was interested in the keys used to potentially discover key reuse or rules that would allow the prediction of new ones.

Even before Kully and I solved the first RED machine message, I had started to keep a record of all significant information we had developed on the "five-digit indicator system" as we called it then. On section of this record was devoted to the "ten-day sequences" [plugboard] ... I spent some time studying the sequences we had recovered, hoping to find some characteristics which would enable me to tabulate the sequences exactly as they were given in the Japanese instructions. ... I began to notice that certain of the letters contained in the six-letter component of one ten-day sequence sometimes occupied similar relative positions in the six-letter component of another ten-day sequence. This observation led me to a reexamination of all the sequences we had recovered to determine if this or a similar relationship could be identified in the other sequences. As I pursued this line of investigation a logical pattern soon began to appear, and I discovered that by appropriately shifting the sequences against each other, I could fit certain of the sequences into a consistent pattern. When I had placed a number of the sequences into what seemed to be their proper relationship with each other, it took only a little further experimentation to discover what appeared to be a logical pattern which controlled the location of certain letters in the two sequences. By applying this pattern to other sequences, I was able to generate a family of sequences all of which possessed the same attributes. While I was generating this family of sequences, imagine my surprise and delight when I recognized one of the sequences as being identical to a sequence we had recovered in one of the earliest key periods that we had solved.

This starling discovery led me to examine each of the other sequences which I had not yet been able to fit into the logical pattern, and I was soon able to establish that after a certain date all the recent ten-day sequences which had been recovered could be found among the sequences which I had generated. ... [Friedman doubts Rowlett's conclusions] I got considerable peronal pleasure out of demonstrating to Friedman that my predictions were valid. I also got additional satisfaction out of promptly identifying the sequence used in the next key period by applying the text that I had devised.

Note: Deavours & Kruh's book (cf. References) describes the algorithm used by the Japanese to generate the Plugboard configurations.

References

Books & Articles

- The Story of Magic, by Frank B. Rowlett, Aegean Park Press, 1998.

- Machine Cryptography and Modern Cryptanalysis, by Cipher A. Deavours and Louis Kruh, Artech House, INC, 1985.

- Codebreaking with IBM machines in world war II, by Stephen Budiansky, Cryptologia, Vol 25, Issue 4 (2001).

Internet

- NSA - Solution of the Japanese RED Machine (draft) - Ref ID: A67330 - "Top Secret" but declassified (link).